Fortune News | May 13,2023

Jun 3, 2023

By Kirubel Tadesse

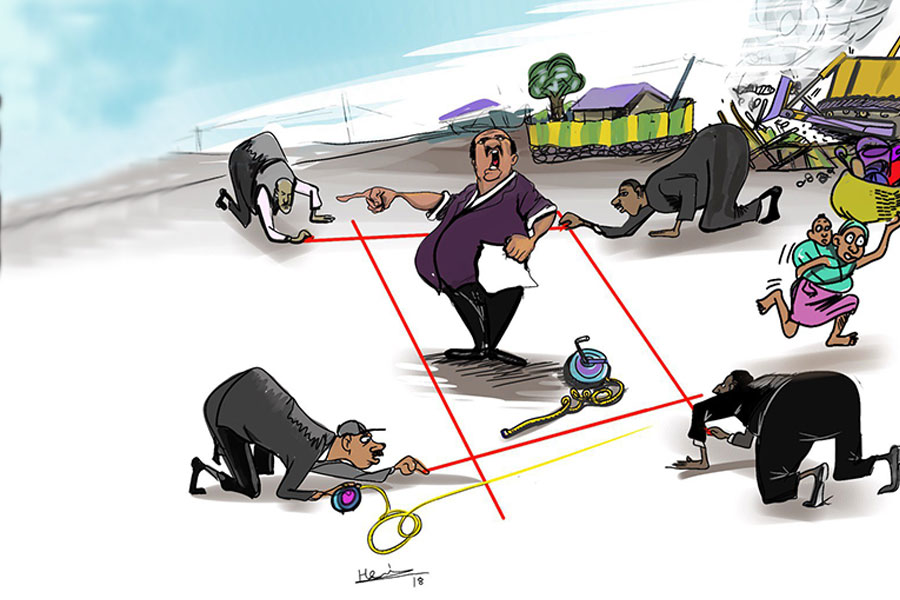

The 17th Internet Governance Forum, a United Nations initiative that champions international cooperation to enhance Internet accessibility, was inaugurated by Ethiopia's Prime Minister Abiy Ahmed (PhD) in November last year. A hint of irony hung over the event, hosted by a country notorious for extensive Internet shutdowns and social media restrictions that serve its ruling party.

In his opening speech, the Prime Minister lamented the Internet’s role in spreading misinformation during the recent civil war in Ethiopia, deftly sidestepping his government’s own digital transgressions. Led by Abiy and his Prosecutor General, Gideon Timothewos (PhD), the government has routinely shown disdain for digital rights; its actions make previous leaders, Meles Zenawi and Hailemariam Desalegn, seem positively libertarian.

The frequent Internet blackouts, a severe drain on the country's coffers, have drawn protests from several domestic and international bodies. Yet these appeals seem to fall on deaf ears.

Social media’s role in the conflict should not be overlooked either. The bloody civil war in Tigray and the neighbouring regional states, which ended only last year through a US-backed truce, was exacerbated by the unchecked use of social media platforms, especially Facebook. Reports suggest these platforms were used to coordinate attacks, spread hate speech, and ignite ethnic violence.

Frances Haugen, a former Facebook employee turned whistleblower, threw a spotlight on the company's role in Ethiopia’s civil war when she claimed in her 2021 testimony to Congress that the social media giant was exacerbating ethnic conflict in the country. While Facebook has drawn flak for its questionable content moderation policies and practices, especially from its African user base, the issue extends beyond regional markets into matters of international concern.

Section 230 of the Communications Decency Act, a 1996 US law, has shielded Facebook from legal repercussions for its content moderation failings. However, with mounting evidence of Facebook-linked political violence in Ethiopia, it is time for the US to reassess its regulatory approach.

Rethinking Section 230 could spur essential reform. Amending it to introduce penalties for negligent content moderation could serve as a deterrent. Another proposal involves establishing a multi-stakeholder content governance mechanism, with the participation of Facebook, national governments, and international institutions, to ensure that social media platforms adhere to human rights agreements.

While voluntary participation presents its own challenges, this mechanism could provide a platform for legitimate grievances to be aired and addressed.

The necessity to strike a balance between online freedom of speech and the prevention of harm has never been more pressing. The onus lies not just on Facebook and other social media platforms but also on international regulatory bodies and national governments to find this equilibrium and uphold the integrity of our digital world.

However, it must be emphasised that these proposals are not a magic bullet. They are potential solutions to a deep-rooted and complex problem that requires ongoing, multi-faceted effort. Facebook’s global influence extends far beyond its American base, and so does the potential fallout from its failure to manage harmful content effectively. An international approach to this issue is essential.

Facebook's seeming disregard for content moderation has ignited a firestorm, casting a damning spotlight on the intersection of technological ingenuity and ethical responsibility. With a staggering 2.9 billion users globally and 8.4 million in Ethiopia alone, the company has seen its content moderation and governance strategies become subjects of critical scrutiny, because they shape global digital discourse and can incite offline violence.

Examining the company’s systems paints a disquieting picture of a tech behemoth unable to regulate its own digital realm effectively. Despite Facebook's insistence that its content moderation combines human review with algorithmic intelligence, the company struggles to moderate inflammatory content in languages other than English. While Facebook claims its AI achieves a 97pc success rate in removing hate speech, the reality in Ethiopia contradicts such assurances: posts violating Facebook's "Community Standards" appear daily, potentially inciting offline harm.

Whistleblower Haugen’s testimony was an unambiguous indictment of Facebook's role in fanning ethnic violence in Ethiopia, a charge Facebook has repudiated. However, independent researchers have raised alarms about the company’s inability to filter hate speech effectively. Global Witness reported a shocking loophole in Facebook's content moderation: posts containing hate speech that had previously been removed were approved for publication when resubmitted as ads.

The stakes and the potential costs of inaction are high for human lives and social cohesion. As Facebook expands its global reach, it is incumbent upon both the platform and policymakers to ensure that technological innovation is balanced with ethical responsibility and that the "town square" of the 21st Century does not become a stage for conflict and division. The task may sound daunting, but with coordinated international effort, it is within reach.

Facebook’s moderation policies have gained prominence not just for their potential to prevent the incitement of violence but also as a test case for balancing the necessity of open discourse against the imperative of security. However, critics charge that Facebook's approach to content moderation is flawed, especially in less influential markets, where users appear to be both over-censored and left exposed to harmful content.

Facebook must recognise that its current approach is insufficient and inconsistent, especially in countries where its content moderation falls woefully short. As the recent history in Ethiopia painfully illustrates, the company's failures are more than just public relations disasters; they are literally a matter of life and death. Its response should be proportionate to the magnitude of the challenge at hand.

PUBLISHED ON Jun 03, 2023 [VOL 24, NO 1205]

Fortune News | May 13,2023

Fortune News | Apr 20,2019

Editorial | Sep 02,2023

Radar | Dec 19,2020

Commentaries | Feb 15,2020

Photo Gallery | 174652 Views | May 06,2019

Photo Gallery | 164876 Views | Apr 26,2019

Photo Gallery | 155091 Views | Oct 06,2021

My Opinion | 136700 Views | Aug 14,2021

Editorial | Oct 11,2025

Dec 22 , 2024 . By TIZITA SHEWAFERAW

Charged with transforming colossal state-owned enterprises into modern and competitiv...

Aug 18 , 2024 . By AKSAH ITALO

Although predictable Yonas Zerihun's job in the ride-hailing service is not immune to...

Jul 28 , 2024 . By TIZITA SHEWAFERAW

Unhabitual, perhaps too many, Samuel Gebreyohannes, 38, used to occasionally enjoy a couple of beers at breakfast. However, he recently swit...

Jul 13 , 2024 . By AKSAH ITALO

Investors who rely on tractors, trucks, and field vehicles for commuting, transporting commodities, and f...

Oct 11 , 2025

Ladislas Farago, a roving Associated Press (AP) correspondent, arrived in Ethiopia in...

Oct 4 , 2025

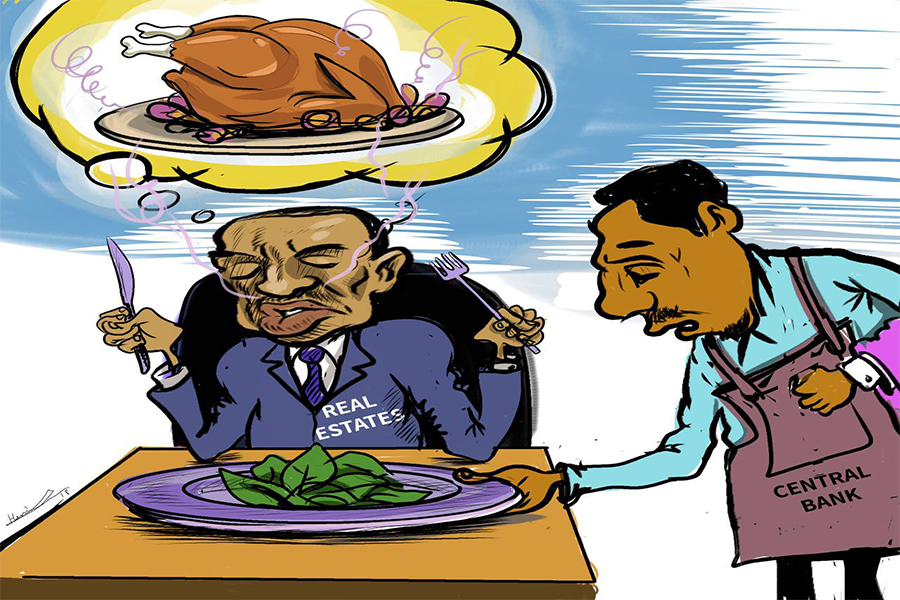

Eyob Tekalegn (PhD) had been in the Governor's chair for only weeks when, on Septembe...

Sep 27 , 2025

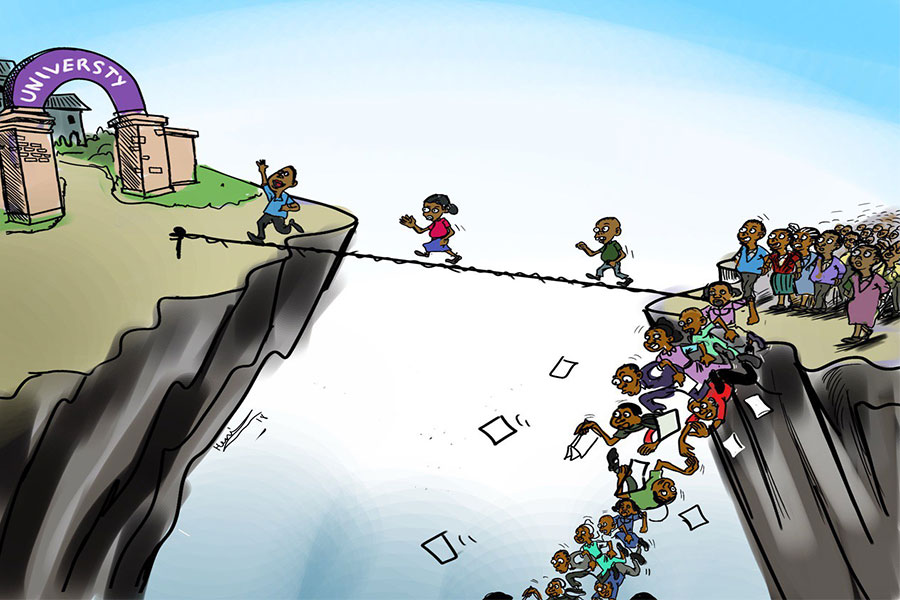

Four years into an experiment with “shock therapy” in education, the national moo...

Sep 20 , 2025

Getachew Reda's return to the national stage was always going to stir attention. Once...

Oct 12 , 2025

Tomato prices in Addis Abeba have surged to unprecedented levels, with retail stands charging between 85 Br and 140 Br a kilo, nearly triple...

Oct 12 , 2025 . By BEZAWIT HULUAGER

A sweeping change in the vehicle licensing system has tilted the scales in favour of electric vehicle (EV...

A simmering dispute between the legal profession and the federal government is nearing a breaking point,...

Oct 12 , 2025 . By NAHOM AYELE

A violent storm that ripped through the flower belt of Bishoftu (Debreziet), 45Km east of the capital, in...