Fortune News | Oct 23,2018

Leaders in Artificial Intelligence (AI), such as OpenAI and DeepMind, view themselves as being in a race to build artificial general intelligence (AGI), a model capable of performing any intellectual task that a human can. At the same time, the US and Chinese governments view the AI race as a national-security priority that demands substantial investments, reminiscent of the Manhattan Project.

In both cases, AI is seen as a new form of "hard power," accessible only to superpowers with vast computational resources and the means to leverage them for economic and military dominance.

But this view is incomplete, and increasingly outdated. Since the Chinese developer DeepSeek launched its lower-cost, competitively performing model earlier this year, we have been in a new era. No longer is the ability to build cutting-edge AI tools confined to a few tech giants. Multiple high-performing models have emerged around the world, showing that AI's true potential lies in its ability to extend soft power.

The era of "bigger-is-better" models came to an end in 2024. Since then, model superiority has not been determined solely by scale (based on ever more data and computing power). DeepSeek proved not only that top-tier models can be built without enormous capital, but also that introducing advanced development techniques can radically accelerate AI progress globally. Dubbed the "Robin Hood of AI democratisation," DeepSeek sparked a wave of innovation with its decision to go open-source.

The OpenAI monopoly (or oligopoly of a few companies) of only a few months ago has given way to a multipolar and highly competitive landscape. Alibaba (Qwen) and Moonshot AI (Kimi) in China have also since released powerful open-source models, Sakana AI (my own company) in Japan has open-sourced AI innovations, and the US giant Meta is investing heavily in its open-source Llama program, aggressively recruiting AI talent from other industry leaders.

Boasting state-of-the-art model performance is no longer sufficient to meet the needs of industrial applications. Consider AI chatbots. They can give "70-point" answers to general questions, but they cannot achieve the "99-point" precision or reliability needed for most real-world tasks, from loan evaluations to production scheduling, which rely heavily on the collective know-how shared among experts. The old framework in which foundation models were considered in isolation from specific applications has reached its limits.

Real-world AI is required to handle interdependent tasks, ambiguous procedures, conditional logic, and exception cases, all of which involve messy variables that demand tightly integrated systems. Accordingly, model developers should take more responsibility for the design of specific applications, and app developers should engage more deeply with the foundational technology.

Such integration matters for the future of geopolitics no less than it does for business. This is reflected in the concept of "sovereign AI," which calls for reducing one's dependence on foreign technology suppliers in the name of national AI autonomy.

Historically, the concern outside the United States has been that by outsourcing critical infrastructure, such as search engines, social media and smartphones, to giant Silicon Valley firms, countries incurred persistent digital trade deficits. Were AI to follow the same path, the economic losses could grow exponentially. Many worry about "kill switches" that could shut off foreign-sourced AI infrastructure at any time.

For all these reasons, domestic AI development is now seen as essential. But sovereign AI does not have to mean that every tool is domestically built. In fact, from a cost-efficiency and risk-diversification perspective, it is still better to mix and match models from around the world. The true goal of sovereign AI should not merely be to achieve self-sufficiency, but to amass AI soft power by building models that others want to adopt voluntarily.

Traditionally, soft power has referred to the appeal of ideas like democracy and human rights, cultural exports like Hollywood films, and, more recently, digital technologies and platforms like Facebook, or, even more subtly, apps like WhatsApp or WeChat that shape cultures through daily habits. When diverse AI models coexist globally, the most widely adopted ones will become sources of subtle yet profound soft power, given how embedded they will be in people's everyday decision-making.

From the perspective of AI developers, public acceptance will be critical to success. Many potential users are already wary of Chinese AI systems (and US systems as well), owing to perceived risks of coercion, surveillance, and privacy violations, among other hurdles to widespread adoption. It is easy to imagine that, in the future, only the most trustworthy AIs will be fully embraced by governments, businesses, and individuals. If Japan and Europe can offer such models and systems, they will be well placed to earn the confidence of the Global South, a prospect with far-reaching geopolitical implications.

Trustworthy AI is not merely about eliminating bias or preventing data leaks. In the long run, it should also embody human-centred principles, enhancing, not replacing, people's potential. If AI ends up concentrating wealth and power in the hands of a few, it will deepen inequality and erode social cohesion. The story of AI has only begun, and it need not become a "winner-takes-all" race. But in both the ageing northern hemisphere and the youthful Global South, AI-driven inequality could create lasting divides.

It is in developers' own interest to ensure that the technology is a trusted tool of empowerment, not a pervasive instrument of control.

PUBLISHED ON Aug 23, 2025 [VOL 26, NO 1321]


Dec 22 , 2024 . By TIZITA SHEWAFERAW

Charged with transforming colossal state-owned enterprises into modern and competitiv...

Aug 18 , 2024 . By AKSAH ITALO

Although predictable Yonas Zerihun's job in the ride-hailing service is not immune to...

Jul 28 , 2024 . By TIZITA SHEWAFERAW

Unhabitual, perhaps too many, Samuel Gebreyohannes, 38, used to occasionally enjoy a couple of beers at breakfast. However, he recently swit...

Jul 13 , 2024 . By AKSAH ITALO

Investors who rely on tractors, trucks, and field vehicles for commuting, transporting commodities, and f...

Oct 25 , 2025

The regulatory machinery is on overdrive. In only two years, no fewer than 35 new pro...

Oct 18 , 2025

The political establishment, notably the ruling party and its top brass, has become p...

Oct 11 , 2025

Ladislas Farago, a roving Associated Press (AP) correspondent, arrived in Ethiopia in...

Oct 4 , 2025

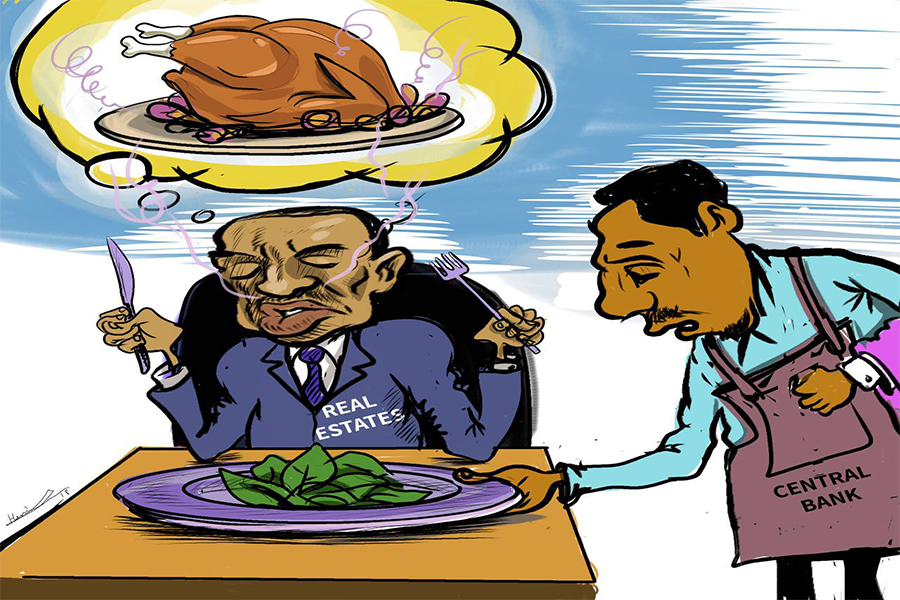

Eyob Tekalegn (PhD) had been in the Governor's chair for only weeks when, on Septembe...